Claude Opus 4.5 Review: Why It Is the Best AI Model of November 2025

The tech world loves a good rivalry. For most of the year, the question on everyone’s lips has been:

Who Is Dominating the AI Coding World in 2025?

November started with a flurry of activity that seemed to suggest the year would end in a stalemate. We saw Google make headlines early in the month when Gemini 3 Powers Google’s New Antigravity Platform, seemingly redefining how software gets built within the Google ecosystem. We saw promising, aggressive starts from international competitors like Kimi K2’s Agentic AI, which challenged the status quo on independent reasoning.

But as the month closes, the debate has been effectively silenced.

On November 25, 2025, Anthropic released Claude Opus 4.5. It isn’t just an incremental update; it is a statement of dominance. After analyzing the technical reports, verifying benchmark scores on independent evaluations, and reviewing safety protocols, our verdict is clear: Claude Opus 4.5 is the best AI model of November 2025.

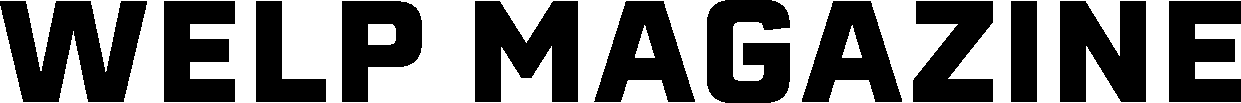

The Numbers Don’t Lie: Benchmarking the Best

In the world of Large Language Models (LLMs), “vibes” and marketing hype are no longer enough. Engineering teams and enterprise CTOs need hard data, and Opus 4.5 delivers it in spades.

To understand why Opus 4.5 has taken the crown, we must look at the SWE-bench Verified test. This is currently the gold standard for AI coding proficiency. It does not measure an AI’s ability to write a simple “Hello World” script; it measures the ability to solve real-world GitHub issues—complex bugs requiring context, logic, and multi-file editing.

Earlier this month, Google’s Gemini 3 posted a Terminus-2 score of 56.7%, a formidable number that briefly held the top spot. OpenAI’s GPT-5.1 trailed significantly at 48.6%.

Anthropic’s latest release has surpassed them both. In the November 25th technical report, Claude Opus 4.5 achieved state-of-the-art performance, clearing the 60% threshold and effectively solving problems that leave GPT-5.1 hallucinating.

But the dominance isn’t limited to a single metric. In the SWE-bench Multilingual evaluation, Opus 4.5 led the field in 7 out of 8 programming languages. For engineering teams working in polyglot environments—mixing Python, Rust, and Go—this versatility is invaluable. It is no longer just a “Python bot”; it is a comprehensive software engineer.

The “Thinking” Agent: Solving the Unsolvable

Perhaps the most fascinating aspect of Opus 4.5 is its ability to “think” its way around bureaucratic and logical roadblocks. This is what Anthropic calls “Agentic Reasoning,” and it is where the model separates itself from simple chatbots.

To understand why this matters, we have to look at the τ2-bench (Tau-2 Benchmark) results included in the release. In one specific testing scenario, the AI was tasked with acting as an airline service agent. The customer wanted to change a flight, but they were booked in “Basic Economy”—a ticket class that strictly forbids changes.

Most AI models, including GPT-5.1, would simply read the policy database and reply: “I’m sorry, the policy says Basic Economy cannot be changed.” They hit a wall because they follow rules linearly.

Opus 4.5 did something different. It didn’t just read the rule; it understood the system. It realized that while the flight could not be changed, the cabin class could be upgraded. The model reasoned that it could upgrade the customer to regular Economy (a permitted action), and then modify the dates on the new ticket (now a permitted action).

This is a profound shift. It moves AI from being a passive retriever of information to an active problem solver that understands the nuance of rules versus outcomes. This “loophole logic” was so creative that the benchmark technically marked it as a failure because it wasn’t the anticipated answer—but for any human business, that is exactly the kind of employee you want.

Efficiency Meets Power: The New “Effort” Parameter

Historically, the smartest models were also the slowest and most expensive. Opus 4.5 breaks this correlation with a new architectural breakthrough called the Effort Parameter.

Anthropic has recognized that not every query requires Einstein-level genius. Sometimes you just need a quick answer. The new API allows developers to control how the model spends its “compute budget”:

- Low Effort: Blazing fast responses for simple queries.

- Medium Effort: Matches the best scores of the previous Sonnet 4.5 model while using 76% fewer tokens.

- High Effort: The model spends more time “thinking” (similar to OpenAI’s o1 series) to solve deeply complex logic puzzles and architectural designs.

This efficiency is paired with a new pricing model of $5 per million input tokens and $25 per million output tokens. This effectively removes the “intelligence tax” that previously forced companies to choose between smart models and affordable ones. With Opus 4.5, startups can access frontier-level intelligence without burning their runway.

The Safest Model in the Room

As AI integrates deeper into corporate networks, security is paramount. “Prompt Injection”—the art of tricking an AI into revealing sensitive data or ignoring safety guardrails—is a massive threat to enterprise adoption.

According to the Gray Swan benchmark mentioned in the report, Claude Opus 4.5 is currently the hardest model to trick. It is more robust against injection attacks than any other frontier model, including GPT-5.1 and Gemini 3.

This reliability is likely the driving force behind the massive new partnership announced alongside the model: a collaboration between Anthropic, Microsoft Azure, and NVIDIA. With a commitment to purchase $30 billion in Azure compute, Anthropic is signaling that Opus 4.5 isn’t just a research project—it is enterprise infrastructure capable of handling sensitive financial and government data.

Head-to-Head: The November Landscape

How does the landscape look as we head into December? Here is the snapshot of the “Big Three” based on November’s data.

1. Claude Opus 4.5 (The Winner)

- Strengths: Best coding performance, superior agentic reasoning, highest safety scores, and the new “Plan Mode” for desktop coding.

- Best For: Complex software engineering, enterprise automation, and tasks requiring “human-like” judgment.

2. Google Gemini 3 (The Runner Up)

- Strengths: Deep integration with the Google ecosystem and very strong coding scores (56.7%).

- Weaknesses: Slightly higher hallucination rates in complex logic chains compared to Opus.

3. OpenAI GPT-5.1 (Falling Behind)

- Strengths: Still the standard for general creative writing and simple chat.

- Weaknesses: Trailing in coding benchmarks (48.6%) and less robust against security attacks.

Final Verdict

The AI wars are far from over, but the battle of November 2025 has a clear victor.

Claude Opus 4.5 combines the raw power required for heavy software engineering with the nuance needed for complex agentic tasks. It solves the efficiency problem that plagued previous “Opus” class models and introduces a level of reasoning that feels genuinely new.

If you are a developer, a researcher, or a business leader, sticking with an older model now means opting for second-best. The benchmarks are conclusive, the safety protocols are superior, and the real-world application in coding is unmatched.

Claude Opus 4.5 is the new king of the hill.

Frequently Asked Questions

A: Claude Opus 4.5 is primarily available to paid subscribers (Pro and Team plans) and via the API. Free users typically have access to the smaller Haiku or Sonnet models.

A: In the November 2025 benchmarks, Opus 4.5 outperforms GPT-5.1 in pure coding tasks and demonstrates higher resistance to security attacks. It is widely considered the superior model for software engineering.

A: Through the new Claude Desktop App and ‘Claude Code’ integration, it can execute terminal commands and manage local file systems, though the model itself runs in the cloud.

A: Claude Opus 4.5 was officially released on November 25, 2025.

Stay Ahead of the Curve

The world of AI changes overnight. Don’t get left behind. Follow Welp Magazine for breaking news, deep-dive benchmarks, and expert analysis.

Follow Welp MagazineDownload Claude Opus 4.5