Big data is gaining traction across many industries, with experts projecting the big data analytics market to reach $268.4 billion by 2026. One of its biggest advantages is the depth of insight it delivers: analytics help businesses better understand their customers’ needs, preferences, and behavior, which yields sharper promotional insights while cutting wasted marketing spend.

To get the most out of their analytics, many companies simply turn to consulting firms that bring a fresh perspective and the necessary experience.

However, the million-dollar question remains: can big data be affordable for all players, including small businesses? Small businesses have long been sidelined from this technology because they don’t enjoy the same economies of scale as tech giants. Fortunately, big data is becoming increasingly accessible and affordable across the board. Here is the current outlook.

Maturity of Big Data Technologies

The number of connected IoT devices worldwide keeps growing and now stands at an estimated 14.4 billion. Demand for these applications has driven rapid innovation in big data technologies. Today, businesses can use the open-source Apache Hadoop framework, which offers a simple programming model (MapReduce), to store and process data distributed across clusters of machines.
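To make Hadoop’s programming model more concrete, here is a minimal, single-process Python sketch of the MapReduce data flow: a map step emits key-value pairs, a shuffle groups them by key, and a reduce step combines each group. The function names are hypothetical and this is not Hadoop’s API; on a real cluster, the framework runs many mappers and reducers in parallel over data stored in HDFS.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Emit a (word, 1) pair for every word in one input line.
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    # Group intermediate values by key, as the framework does between map and reduce.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine all values collected for one key into a single result.
    return key, sum(values)


def word_count(lines):
    intermediate = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data big insights", "data at scale"])
print(counts)
```

Because each map call only sees one line and each reduce call only sees one key, both steps can be spread across many machines, which is what lets the same simple model scale to very large data sets.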

The industry has also seen the rise of R, an open-source language and software environment for statistical computing and graphics. Besides data visualization, R is used to write statistical software and run data analyses.

Other technologies that are marking the maturity of big data in terms of accessibility and affordability include:

- Predictive Analytics: Businesses can now use predictive analytics, a popular family of big data techniques, to forecast marketing trends and customer behavior.

- TensorFlow: Businesses and R&D teams that invest in machine learning (ML) find TensorFlow, an open-source ML platform, useful because it comes with scalable development tools and libraries.

- Blockchain: Blockchain is a distributed ledger technology that records transactions across a decentralized network of nodes rather than on a single central server.

- Kubernetes: Kubernetes is an open-source container orchestration system, originally designed by Google, that automates the deployment, scaling, and management of containerized applications across clusters.
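Predictive analytics covers many kinds of models. As a minimal illustration, assuming a simple linear trend is a reasonable fit, the Python sketch below fits a straight line to a short series of hypothetical monthly sales figures by ordinary least squares and extends it forward. Production forecasting tools typically also account for seasonality, noise, and many more variables.

```python
def fit_linear_trend(values):
    """Least-squares fit of y = intercept + slope * t, where t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    # Slope = covariance(t, y) / variance(t).
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(values, periods_ahead):
    # Extend the fitted line past the last observed period.
    intercept, slope = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


monthly_sales = [100, 110, 120, 130]  # hypothetical data
print(forecast(monthly_sales, 2))
```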

Prevalent Types of Big Data Technologies

Over the last decade, big data technologies have evolved into several categories, allowing businesses to focus on the options that match their specific needs. The most prevalent categories, along with associated business tools, include:

Data Storage

Big data storage technologies gather data from multiple sources and organize it for easier management. Among the most widespread storage tools that also support concurrent access are MongoDB and Apache Hadoop. MongoDB, a NoSQL document database written mainly in C++, offers a shorter learning curve for businesses that want to store voluminous data sets. Apache Hadoop, on the other hand, is open-source software that stores and processes structured, semi-structured, and unstructured data across clusters of commodity hardware.
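Hadoop’s storage layer, HDFS, splits each file into large blocks and keeps several copies of every block on different machines. The rough Python sketch below estimates how a file is laid out, assuming the stock defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); your cluster’s configuration may differ, and the helper name is hypothetical.

```python
import math

# Assumed defaults; check dfs.blocksize and dfs.replication in your cluster's configuration.
BLOCK_SIZE_MB = 128
REPLICATION = 3


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return (blocks, stored block replicas, raw storage in MB) for one file."""
    # The file is cut into fixed-size blocks; the last block may be only partly full.
    blocks = math.ceil(file_size_mb / block_size_mb)
    # Every block is copied `replication` times onto different machines.
    block_replicas = blocks * replication
    # A partial last block only uses the space it needs, so raw usage scales with file size.
    raw_storage_mb = file_size_mb * replication
    return blocks, block_replicas, raw_storage_mb


print(hdfs_footprint(1000))  # a hypothetical 1,000 MB file -> (8, 24, 3000)
```

Tripling raw storage is the price of fault tolerance: if one machine fails, HDFS can still serve every block from another copy.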

Data Mining

Easy-to-use data mining tools such as Presto and RapidMiner enable businesses to query structured and unstructured data for meaningful insights. Presto, a distributed SQL query engine, is well suited to running analytic queries against large data sets, while RapidMiner is popular for designing predictive models.
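Presto speaks standard SQL, so analytic work often looks like an ordinary aggregate query. Since Presto needs a running cluster, the Python sketch below uses the built-in `sqlite3` module as a local stand-in purely to show the shape of such a query; the `orders` table and its rows are hypothetical, and on Presto the same SELECT ... GROUP BY pattern would run against far larger tables through its connectors.

```python
import sqlite3

# In-memory database standing in for a large analytic data store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("north", 120.0), ("south", 80.0), ("north", 60.0), ("west", 200.0)],
)

# Aggregate order count and revenue per region, highest revenue first.
query = """
    SELECT region, COUNT(*) AS orders, SUM(amount) AS revenue
    FROM orders
    GROUP BY region
    ORDER BY revenue DESC
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
conn.close()
```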

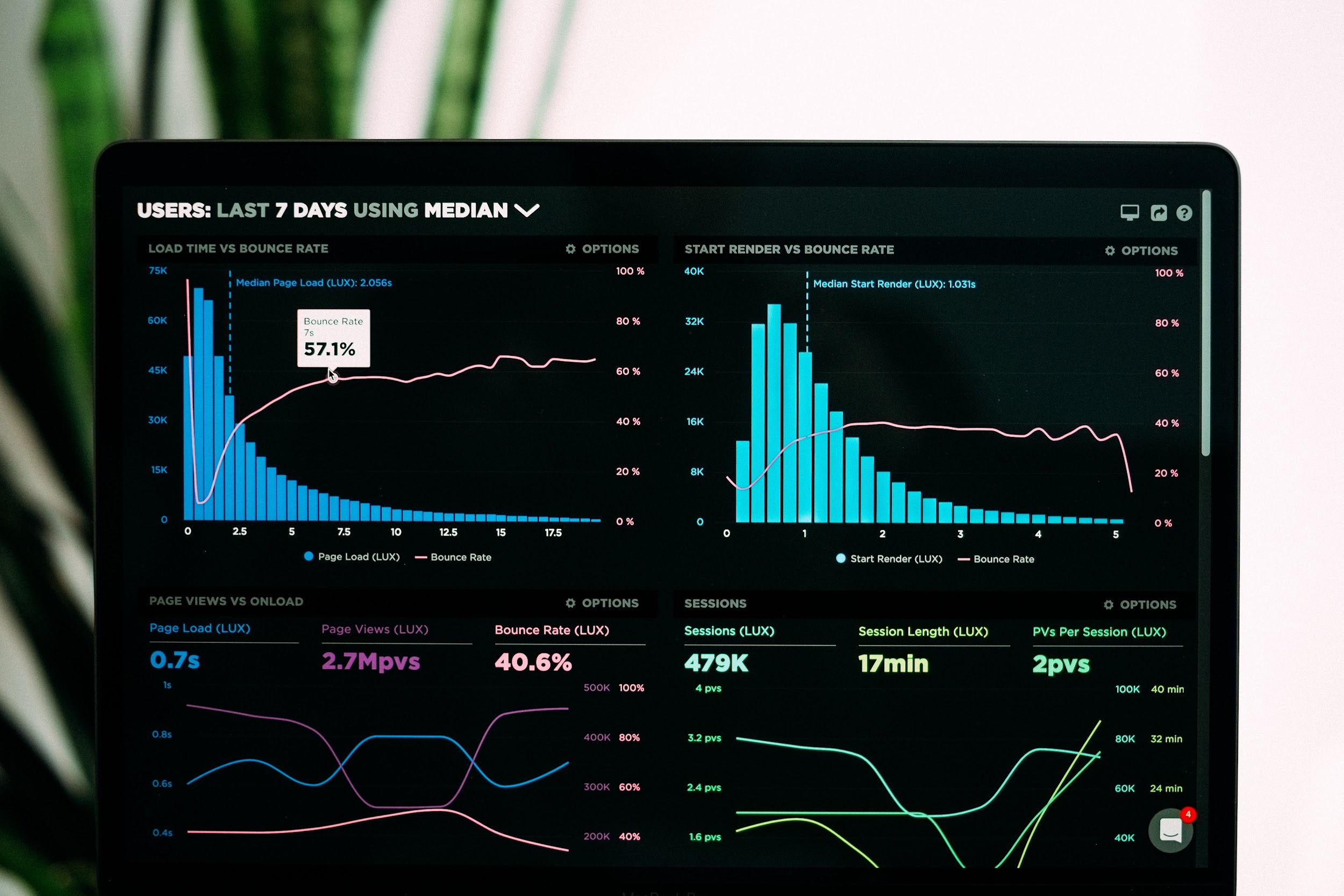

Data Analytics

Big data analytics tools have become central to key business decisions. Tools such as Splunk and Apache Spark enable businesses to clean and transform data into actionable insights. Apache Spark, for instance, keeps intermediate data in memory (RAM) instead of writing it to disk between processing steps, as Hadoop MapReduce does, which makes it considerably faster for iterative and interactive workloads. Splunk specializes in searching and analyzing machine-generated data such as logs, and it pairs that analysis with machine learning features to generate graphs, alerts, and dashboards.
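Why does keeping data in memory matter? Iterative jobs, such as training a model, read the same data many times. The toy Python class below is a hypothetical stand-in, not Spark’s API; it only counts how often the source data is read with and without an in-memory cache, loosely mirroring what calling `cache()` on a Spark dataset achieves.

```python
class ToyDataset:
    """Hypothetical stand-in for a distributed dataset that counts source reads."""

    def __init__(self, loader):
        self._loader = loader  # function that simulates reading from disk
        self._cached = None
        self.reads = 0

    def _read_source(self):
        self.reads += 1
        return self._loader()

    def cache(self):
        # Read once and keep the result in memory for later passes.
        self._cached = self._read_source()
        return self

    def collect(self):
        # Serve from memory if cached; otherwise go back to the source every time.
        if self._cached is not None:
            return self._cached
        return self._read_source()


def iterative_job(dataset, passes):
    # Each pass re-reads the full dataset, as many ML training loops do.
    return sum(sum(dataset.collect()) for _ in range(passes))


uncached = ToyDataset(lambda: [1, 2, 3])
cached = ToyDataset(lambda: [1, 2, 3]).cache()
iterative_job(uncached, 3)
iterative_job(cached, 3)
print(uncached.reads, cached.reads)
```

With three passes, the uncached dataset hits its source three times while the cached one reads it once; at cluster scale, each avoided read is a full scan of data on disk.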

Data Visualization

Lastly, development and marketing teams need to present big data findings to the rest of the company and its shareholders. This need has driven the rise of big data visualization tools such as Looker and Tableau. Looker is a business intelligence platform known for its powerful analytical capabilities. Tableau, on the other hand, can easily generate bar charts, Gantt charts, pie charts, and even box plots for real-time sharing and presentation.

The Relatively Affordable Cost of Big Data

Cloud solutions such as Amazon Web Services (AWS) are making big data technologies far more cost-effective. AWS offers On-Demand Instances, which let small businesses pay only for the compute capacity they actually use, billed by the hour or by the second depending on the instance and operating system. With this approach, clients get flexible performance and capacity without any upfront commitment.
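The pay-as-you-go model comes down to simple arithmetic. The Python sketch below compares per-second and per-hour billing for the same job. The hourly rate is hypothetical, the sketch ignores storage, data transfer, and other charges, and the 60-second minimum is an assumption based on AWS’s per-second billing for many Linux instances, so check current EC2 pricing before relying on it.

```python
import math


def on_demand_cost(runtime_seconds, hourly_rate, per_second=True):
    """Estimate the compute charge for one On-Demand instance run (simplified)."""
    if runtime_seconds <= 0:
        return 0.0
    if per_second:
        # Per-second billing with an assumed 60-second minimum charge.
        billable_seconds = max(runtime_seconds, 60)
        return hourly_rate * billable_seconds / 3600
    # Hourly billing: any partial hour is charged as a full hour.
    return hourly_rate * math.ceil(runtime_seconds / 3600)


HOURLY_RATE = 0.10  # hypothetical USD rate; real prices vary by instance type and region
job_seconds = 90 * 60  # a 90-minute batch job
print(round(on_demand_cost(job_seconds, HOURLY_RATE), 4))
print(round(on_demand_cost(job_seconds, HOURLY_RATE, per_second=False), 4))
```

For short or bursty jobs, finer-grained billing is what keeps small teams from paying for idle capacity.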

Other cost-effective cloud platforms for big data applications include Microsoft Azure and Google Cloud Platform (GCP). Azure offers a reliable, scalable platform with automation and monitoring capabilities. GCP offers relatively affordable custom machine types, although costs can add up quickly for accelerator-optimized (GPU-backed) instances.

Development Costs

Cloud technologies are also reducing the cost and effort of developing big data applications. For instance, a serverless stack provides a host of reusable components, which keeps the architecture consistent. Taking the serverless approach also makes integration and end-to-end testing less demanding. Because components run in isolated sandboxes, each one can be tested, iterated on, or fixed independently, which reduces costly back-and-forth during development.
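As a rough illustration of reusable serverless components, the Python sketch below separates a small, side-effect-free cleaning function from a thin AWS Lambda-style entry point, `lambda_handler(event, context)`. The event shape, with a JSON string under `body`, assumes an API Gateway-style request, and the record fields and the `normalize_record` helper are hypothetical. Because the handler is just a function, it can be exercised locally with a fake event before anything is deployed.

```python
import json


def normalize_record(record):
    # Reusable, side-effect-free component: easy to test in isolation.
    return {
        "email": record["email"].strip().lower(),
        "amount": round(float(record["amount"]), 2),
    }


def lambda_handler(event, context):
    # Thin entry point: parse the request, delegate to the component, format the response.
    records = json.loads(event["body"])
    cleaned = [normalize_record(r) for r in records]
    return {"statusCode": 200, "body": json.dumps(cleaned)}


# Local test with a fake event; no cloud resources are needed.
fake_event = {"body": json.dumps([{"email": " Ana@Example.com ", "amount": "19.999"}])}
response = lambda_handler(fake_event, context=None)
print(response)
```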

General development costs are also reduced by automating deployment. Deployment automation lets businesses release products faster and more frequently. Even better, it provides immediate feedback that teams can feed into each subsequent iteration.
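At its core, an automated deployment pipeline runs a fixed sequence of checks and only releases when all of them pass. The minimal Python sketch below shows that fail-fast logic with hypothetical stage names; in practice, teams would usually express this in a CI/CD service rather than in hand-rolled code.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order and stop at the first failure."""
    passed = []
    for name, check in stages:
        if not check():
            # Fail fast: report which stage broke so the team gets feedback right away.
            return {"deployed": False, "passed": passed, "failed_at": name}
        passed.append(name)
    return {"deployed": True, "passed": passed, "failed_at": None}


# Hypothetical stages; each would normally call a build tool, test runner, or deploy script.
stages = [
    ("build", lambda: True),
    ("unit_tests", lambda: True),
    ("integration_tests", lambda: False),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```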

Ongoing and Maintenance Costs

Maintenance costs on the business side are relatively low, since cloud service providers have invested heavily in system monitoring to ensure performance and production reliability. However, clients still have to handle certain maintenance areas, including:

- Software bug mitigation: Software bugs, although rare, can mean relocating applications that experience outages to a separate cloned cluster, which can be time-consuming.

- Infrastructure outage mitigation: IT departments can occasionally run into accidental or computational outages. However, resolving these infrastructure-level issues is usually the service provider’s responsibility.

- Ongoing activities: The frequency of ongoing activities, such as scaling and upgrades, determines the maintenance costs incurred in the long run.
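Scaling is often automated. Kubernetes’ Horizontal Pod Autoscaler, for example, derives the replica count from the ratio of an observed metric to its target: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces only that core formula, plus a tolerance band (0.1 by default in Kubernetes) and min/max bounds; the real controller also applies stabilization windows and other rules, and the numbers in the example are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core Horizontal Pod Autoscaler rule (simplified sketch)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Respect the configured lower and upper bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))
```

Shrinking to fewer replicas during quiet periods is where much of the long-run saving comes from, since you stop paying for capacity you are not using.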

The Cost of Implementing Big Data

Change can be daunting, but it is often inevitable, especially in a field driven by innovation. Although shifting to cloud and big data technologies can add tasks such as data migration, the overall process can be tailored to specific budgets. Moreover, big data systems can be built as decoupled components, allowing developers to make local changes without disrupting the whole production process.

Wrapping It Up

The bottom line is that big data is becoming affordable for a much wider range of businesses. The evolution of big data technologies keeps bringing new design patterns and approaches, enabling developers to build and deploy cloud-native applications with fewer resources and at lower cost.